Overview

UCSF is committed to ensuring AI is used safely and responsibly, upholding the principles of trustworthy AI in health care and research.

What Is Trustworthy AI?

Trustworthy AI (TAI) is a framework to mitigate the various forms of risk arising from AI. Created by the US Department of Health and Human Services in 2021, the framework offers detailed guidance to ensure that health-related AI is implemented in a way that is ethical, effective and secure.

Guidelines for Developing or Evaluating AI

If you are developing or evaluating AI tools for UCSF, please carefully review the US Department of Health and Human Services Trustworthy AI Playbook and its recommendations for applying Trustworthy AI (TAI) principles across the AI lifecycle.

Any evaluation or pilot of AI for use at UCSF Health requires review by the Health AI Oversight Committee. The review process is structured around TAI, and detailed reviews are required before integrating AI applications with UCSF data, piloting AI applications with patients, and deploying AI in the health system.

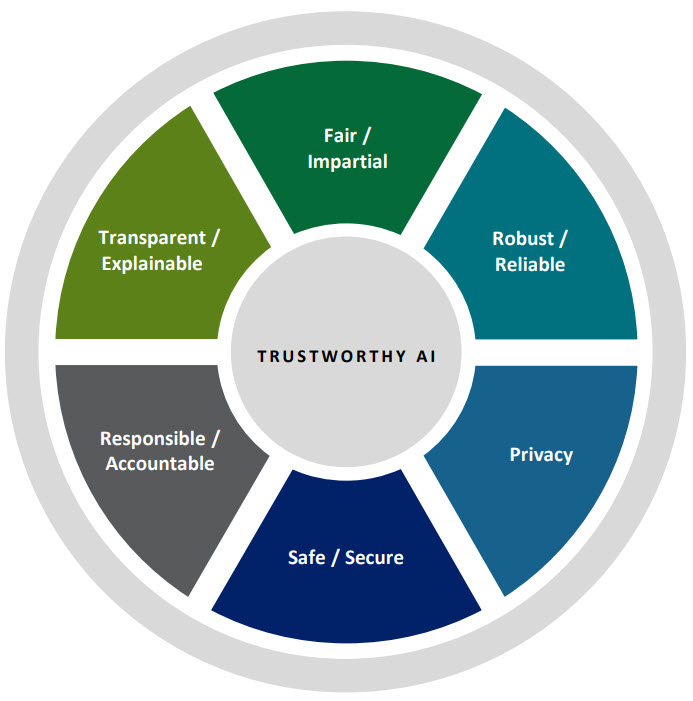

Trustworthy AI has six major principles: Fair/Impartial, Robust/Reliable, Transparent/Explainable, Responsible/Accountable, Privacy and Safe/Secure.

Fair / Impartial

AI applications should include checks from internal and external stakeholders to help ensure equitable application across all participants. Data will be assessed to ensure that they meet established fairness requirements, are representative of target populations, and have been corrected for data biases.

Example: An AI-based tool used to help public health experts flag geographic areas for targeted outreach should be trained on data that is representative of the target population so it performs well for all sub-groups.
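One way to check whether a tool "performs well for all sub-groups" is to compare a performance metric across those groups. The following is a minimal sketch, not a method prescribed by the Playbook: the function names, the sub-group labels, and the evaluation records are all hypothetical, and accuracy stands in for whatever metric suits the use case.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for an iterable of (group, y_true, y_pred) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(records):
    """Largest difference in accuracy between any two sub-groups."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Hypothetical evaluation records: (sub-group label, true outcome, model prediction)
records = [
    ("urban", 1, 1), ("urban", 0, 0), ("urban", 1, 1), ("urban", 0, 1),
    ("rural", 1, 0), ("rural", 0, 0), ("rural", 1, 0), ("rural", 0, 0),
]

scores = accuracy_by_group(records)
gap = max_accuracy_gap(records)
print(scores, gap)
```

A large gap between groups is a prompt for further review (for example, of how well each group is represented in the training data), not a verdict on its own. What counts as an acceptable gap depends on the use case.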

Robust / Reliable

AI systems should have the ability to produce accurate and reliable outputs consistent with the original design and to learn from humans and other systems. Data will be assessed for accuracy, data drift, and data contamination.

Example: If new research comes out about the relationship between biomarkers and brain deterioration, an AI model that predicts brain deterioration should be retrained to reflect the updated research.
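Data drift, mentioned above, can be monitored by comparing the distribution of a model input at training time with its distribution in current use. The sketch below uses the Population Stability Index (PSI), one common drift statistic chosen here for illustration. The Playbook does not name a specific measure, and the function names, bin cut points, and sample values are assumptions.

```python
import math
from bisect import bisect_right


def bin_shares(values, cut_points, eps=1e-6):
    """Fraction of values in each bin.

    Sorted cut_points split the number line into len(cut_points) + 1 bins.
    Shares are floored at eps so that empty bins do not cause log(0).
    """
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect_right(cut_points, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline, current, cut_points):
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    b = bin_shares(baseline, cut_points)
    c = bin_shares(current, cut_points)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


# Hypothetical values of one input feature at training time and in current use
baseline = [1, 2, 3, 4, 5, 6, 7, 8]
shifted = [5, 6, 7, 8, 9, 10, 11, 12]
cut_points = [3, 6]

psi_same = population_stability_index(baseline, baseline, cut_points)
psi_shifted = population_stability_index(baseline, shifted, cut_points)
print(psi_same, psi_shifted)
```

A PSI of zero means the binned distributions match, and larger values indicate a larger shift. The threshold that should trigger review or retraining is a local policy decision. This check only covers changes in input data; it does not detect that the underlying science has changed, as in the example above.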

Transparent / Explainable

All relevant individuals should be able to understand how AI systems make decisions and how data is being used; algorithms should be open to inspection. Relevant stakeholders will need to be consulted to ensure there is effective communication with all groups impacted by the AI system.

Example: If an organization uses AI to match patients to clinical trials, the organization needs to be able to explain the solution’s decision-making process to patients, providers and researchers.

Responsible / Accountable

Policies should outline governance and who is held responsible for all aspects of the AI solution (e.g., initiation, development, outputs, decommissioning). There should be people responsible for continuously monitoring against drift, bias, or failure, and people should be able to retrace how an AI system arrived at a certain recommendation or solution.

Example: If stakeholders observe that an AI pharmacy benefits processing tool used to aid pharmacists is inappropriately accessing system data, they should be able to quickly identify the human custodian to resolve the issue.
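Retracing a recommendation and finding its custodian both depend on recording, for each output, which model version produced it and who is accountable for it. The following is a minimal sketch of such a record. The field names, the model name, and the contact address are hypothetical and do not describe any UCSF system.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PredictionRecord:
    model_name: str
    model_version: str
    custodian: str      # hypothetical: person or team accountable for the model
    input_sha256: str   # fingerprint used to match a decision to its inputs
    output: str
    logged_at: str      # UTC timestamp in ISO 8601 format


def make_record(model_name, model_version, custodian, inputs, output):
    # Serialize with sorted keys so identical inputs always hash to the same value.
    payload = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return PredictionRecord(
        model_name=model_name,
        model_version=model_version,
        custodian=custodian,
        input_sha256=hashlib.sha256(payload).hexdigest(),
        output=output,
        logged_at=datetime.now(timezone.utc).isoformat(),
    )


record = make_record(
    "benefits-triage",                      # hypothetical model name
    "1.4.2",
    "pharmacy-informatics@example.org",     # hypothetical custodian contact
    {"claim_id": "C-001", "drug_code": "X123"},
    "approve",
)
log_line = json.dumps(asdict(record))
print(log_line)
```

Storing a hash instead of the raw inputs keeps the log compact, but a hash is not a de-identification method, so such a log would still need the access controls described under Privacy and Safe / Secure.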

Privacy

Privacy for all individuals, groups, or entities should be respected. People's data should not be used beyond an AI tool's intended and stated use. In addition, data used by an AI tool must be approved by a data owner or steward.

Example: A research team is studying the use of mobile technology to track symptoms of Parkinson’s disease. If the team uses the mobile data to build an AI model that provides tailored recommendations to users, it first needs to obtain consent from patients.

Safe / Secure

AI systems should be protected from risks that may directly or indirectly cause physical and/or digital harm to any individual, group or entity. Security risks should be assessed for their level of risk, their potential impacts and the controls in place to mitigate vulnerabilities.

Example: An NLP-based AI solution that interprets handwritten medical records needs to have data encryption, user authentication and other applicable security controls to prevent hackers from stealing records.

As the Trustworthy AI Guidelines make clear, every application of AI involves tradeoffs across these dimensions. The key to an evaluation of trustworthiness is identifying risks and developing strategies to mitigate them.

Addressing Bias & Explainability

Bias in data and AI algorithms can increase healthcare disparities and impact patient safety. The Trustworthy AI Guidelines offer tips for identifying and addressing bias; these are crucial to ethical applications of AI in healthcare:

The Guidelines also address explainability and accuracy, noting that the balance between these competing demands is often use-case dependent:

The US National Institute of Standards and Technology (NIST) has published two guidelines that align with TAI principles and help build trust and confidence in AI:

Anyone developing or evaluating AI should review the principles outlined in these guidelines and ensure they are applied to AI designs and implementation plans.

Additional Guidelines for Safe & Ethical AI

The Trustworthy AI (TAI) framework aligns with the "FAVES" principles outlined by the federal government, which hold that health care AI should be Fair, Appropriate, Valid, Effective and Safe. It also supports the practical implementation of the Principles for AI Development, Deployment and Use, published by the American Medical Association.

TAI is similar to other published recommendations for safe and ethical AI development and deployment:

- The UC Office of the President (UCOP) Recommendations to Guide the University of California's Artificial Intelligence Strategy

- The US National Institute of Standards and Technology (NIST) AI Risk Management Framework

- The European Union Guidelines on Ethics in Artificial Intelligence

- The Coalition for Health AI, of which UCSF is a member, offers its Blueprint for Trustworthy AI Implementation Guidance and Assurance for Healthcare